According to a report by IDC, 82% of businesses using cloud services like AWS are concerned about optimizing their cloud infrastructure costs. To help companies reduce their spending, we will explore best practices for cost optimization when using AWS data analytics services.

Key Stats on AWS Data Processing Costs

- AWS accounts for roughly 17% of Amazon’s total revenue but more than half of its operating income (2024 figures).

- Companies can save up to 30% by optimizing their use of cloud computing resources, according to a Gartner report.

- AWS data processing costs can account for up to 50% of an organization’s cloud spending, making it one of the primary cost centers.

- 75% of AWS customers report not fully optimizing their cloud usage, which leads to unnecessary expenditures.

With these figures in mind, it’s clear that cost optimization in AWS is not just a nice-to-have but a necessity for most companies.

Table of Contents

- Understanding AWS Data Analytics Services

- Why Cost Optimization Matters

- Best Practices for Cost Optimization on AWS Data Processing

- Partner with HashStudioz for AWS Data Analytics Services

- Conclusion

- Frequently Asked Questions (FAQs)

- 1. How can AWS data analytics services help businesses reduce costs?

- 2. What is the difference between Reserved Instances and Savings Plans on AWS?

- 3. How can I optimize data storage costs on AWS?

- 4. Can Spot Instances save money for AWS data processing workloads?

- 5. How do AWS Cost Explorer and Budgets help with cost optimization?

Understanding AWS Data Analytics Services

AWS offers a broad array of data analytics services that allow companies to perform everything from real-time data processing to large-scale batch analysis. Some of the most commonly used AWS data analytics services include:

- Amazon Redshift: A managed data warehouse solution for fast querying and analytics.

- AWS Glue: A serverless data integration service for ETL (Extract, Transform, Load) processes.

- Amazon Kinesis: A platform for real-time streaming data processing.

- Amazon Athena: An interactive query service that allows you to analyze data in Amazon S3 using standard SQL.

- Amazon EMR: A platform that allows companies to process vast amounts of data using Apache Hadoop and Spark.

While these services are powerful, they can also contribute to high operational costs if not properly managed. Implementing effective cost-cutting strategies is crucial to keeping cloud expenses under control.

Why Cost Optimization Matters

As of 2024, AWS continues to dominate the cloud market, with its annual revenue surpassing $100 billion, driven largely by businesses leveraging its data analytics and computing services. With more enterprises shifting to cloud-based infrastructures, cloud spending has become a critical financial consideration.

Effective cost optimization strategies can significantly impact a company’s bottom line. By implementing best practices such as rightsizing instances, leveraging spot instances, and optimizing storage, businesses can reduce AWS costs by 30% to 50% without compromising performance or reliability.

In an era where businesses face mounting pressure to maximize efficiency and profitability, proactive cloud cost management is no longer optional; it is essential.

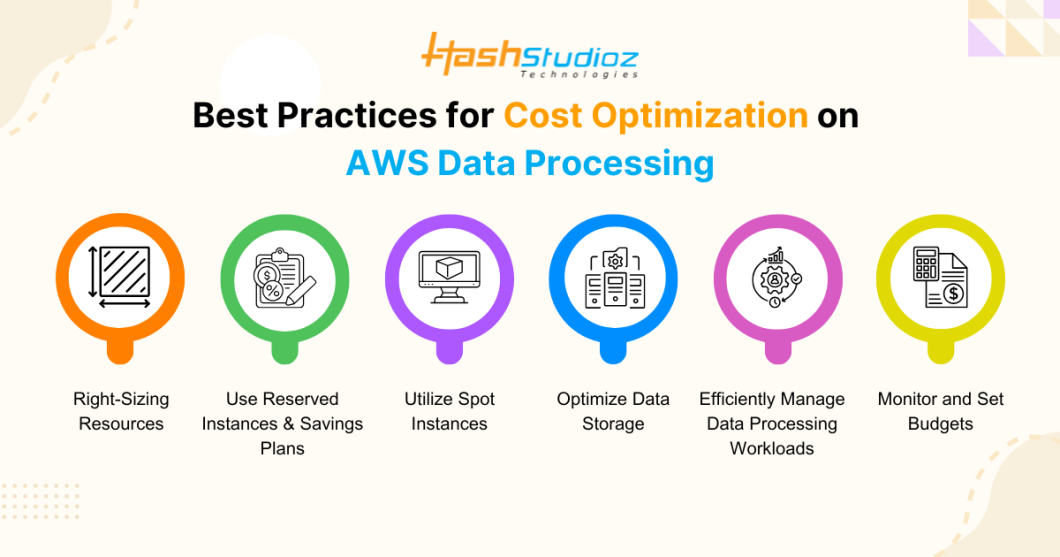

Best Practices for Cost Optimization on AWS Data Processing

1. Right-Sizing Resources

One of the most effective ways to cut costs is to right-size your AWS resources based on your actual usage. Many companies over-provision resources to avoid performance bottlenecks, but this often leads to wasted expenses.

- Right-Size EC2 Instances: Review your EC2 instances periodically and adjust their size based on workload requirements. AWS provides tools like Compute Optimizer to help you identify underutilized instances and recommend more cost-effective alternatives.

- Scale Down Unused Resources: Monitor unused or underutilized resources like idle EC2 instances or unnecessary storage in Amazon S3. If an instance isn’t being used, terminate it to avoid paying for unused resources.
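The periodic review described above can be sketched in a few lines of Python. This is a minimal illustration, not how Compute Optimizer works: the instance IDs, the CPU samples, and the 20% average / 40% peak thresholds are all hypothetical, and a real review would also weigh memory, network, and disk metrics.

```python
from statistics import mean


def flag_underutilized(cpu_by_instance, avg_threshold=20.0, peak_threshold=40.0):
    """Return IDs of instances whose average AND peak CPU stay below the thresholds."""
    flagged = []
    for instance_id, samples in cpu_by_instance.items():
        if not samples:
            continue  # no metrics collected: do not guess
        if mean(samples) < avg_threshold and max(samples) < peak_threshold:
            flagged.append(instance_id)
    return sorted(flagged)


# Hypothetical CPU utilization samples (percent) over a review window.
usage = {
    "i-batch-01": [5.0, 8.0, 12.0, 6.0],     # consistently idle
    "i-etl-02": [55.0, 70.0, 90.0, 65.0],    # busy
    "i-report-03": [2.0, 3.0, 60.0, 3.0],    # low average, but a real peak
}

candidates = flag_underutilized(usage)
print(candidates)
```

Checking the peak as well as the average keeps bursty instances such as the hypothetical `i-report-03` off the downsizing list, which addresses the performance worry that leads teams to over-provision in the first place.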

Example:

A company using Amazon EC2 for big data processing can first downsize the instances that Compute Optimizer flags as over-provisioned, then move the steady, predictable portion of its usage from on-demand instances to reserved instances, leading to substantial savings. In addition, running non-critical jobs like batch processing on spot instances can reduce costs further.

2. Use Reserved Instances and Savings Plans

AWS offers several options for cost savings, including Reserved Instances and Savings Plans. Both options can offer significant discounts compared to on-demand pricing.

- Reserved Instances (RIs): By committing to a one- or three-year term for certain services (e.g., EC2, Redshift), companies can receive a discount of up to roughly 72% on EC2 compared to on-demand pricing, with the exact figure varying by service, term, and payment option. This is ideal for predictable workloads that run consistently over time.

- Savings Plans: AWS also offers Savings Plans, which trade a commitment to a consistent hourly spend (in dollars per hour) over one or three years for discounted compute rates. Compute Savings Plans apply across EC2, Fargate, and Lambda regardless of instance family or Region, which makes them a better fit than RIs for businesses whose workload mix changes over time.
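Whether a commitment pays off depends on how many hours the resource actually runs. The sketch below works through that arithmetic under simplifying assumptions: the $0.10 hourly rate and 40% discount are hypothetical, a month is approximated as 730 hours, and the commitment is billed for every hour whether or not it is used.

```python
HOURS_PER_MONTH = 730  # common approximation for an average month


def on_demand_cost(hourly_rate, hours_used):
    """On-demand: pay only for the hours actually used."""
    return hourly_rate * hours_used


def committed_cost(hourly_rate, discount, hours_in_month=HOURS_PER_MONTH):
    """Commitment: pay the discounted rate for every hour, used or not."""
    return hourly_rate * (1 - discount) * hours_in_month


def break_even_utilization(discount):
    """Fraction of the month a resource must run before the commitment is cheaper."""
    return 1 - discount


rate = 0.10      # hypothetical on-demand price, USD per hour
discount = 0.40  # hypothetical commitment discount

for hours in (300, 730):
    od = on_demand_cost(rate, hours)
    cm = committed_cost(rate, discount)
    cheaper = "commitment" if cm < od else "on-demand"
    print(f"{hours} h/month: on-demand ${od:.2f}, committed ${cm:.2f} -> {cheaper}")

print(f"break-even utilization: {break_even_utilization(discount):.0%}")
```

Under these assumptions a 40% discount only pays off for resources that run more than 60% of the month, which is why commitments suit the predictable baseline rather than occasional workloads.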

3. Utilize Spot Instances

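For fault-tolerant workloads that can survive interruption, Spot Instances offer a way to significantly reduce costs. Spot Instances let you run on unused EC2 capacity at the current Spot price, with savings of up to 90% compared to on-demand pricing. AWS no longer requires bidding; the trade-off is that it can reclaim the capacity after a two-minute warning.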

- Use Spot Instances for Batch Jobs: Companies with batch processing needs or data analytics workloads can use Spot Instances to handle large datasets without incurring high costs.

- Implement Auto Scaling: With Auto Scaling, you can automate the process of scaling your Spot Instances based on the demand. This ensures that you always have the necessary compute power while controlling costs.
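Batch jobs on Spot capacity need to survive being stopped partway through. One common pattern is checkpointing, sketched below as a local simulation: the `Interrupted` exception and the `interrupt_after` parameter are hypothetical stand-ins for a Spot interruption, and the checkpoint is a local JSON file where a real job would use durable storage such as S3.

```python
import json
import os
import tempfile


class Interrupted(Exception):
    """Stands in for a Spot interruption in this simulation."""


def run_batch(items, checkpoint_path, handle, interrupt_after=None):
    """Process items in order, saving progress so a restart resumes where it stopped."""
    start = 0
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            start = json.load(f)["next_index"]

    processed = 0
    for i in range(start, len(items)):
        if interrupt_after is not None and processed >= interrupt_after:
            raise Interrupted(f"interrupted before item {i}")
        handle(items[i])
        # Record progress only after the item has been handled.
        with open(checkpoint_path, "w") as f:
            json.dump({"next_index": i + 1}, f)
        processed += 1
    return processed


items = list(range(10))
output = []

with tempfile.TemporaryDirectory() as tmp:
    ckpt = os.path.join(tmp, "checkpoint.json")
    try:
        run_batch(items, ckpt, output.append, interrupt_after=4)  # simulated interruption
    except Interrupted:
        pass
    resumed = run_batch(items, ckpt, output.append)  # replacement instance picks up

print(output, resumed)
```

Because the checkpoint is written after each item is handled, an interruption between those two steps would cause that item to be handled again on restart, so handlers in this pattern should be idempotent.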

4. Optimize Data Storage

Data storage costs can quickly spiral out of control if not optimized. AWS offers several storage options, and choosing the right one is crucial for cost savings.

- Use Amazon S3 Lifecycle Policies: Automate the movement of data between storage classes to optimize costs. For example, frequently accessed data can stay in S3 Standard, infrequently accessed data can move to S3 Standard-IA, and data kept only for long-term archival can move to S3 Glacier.

- Leverage Data Compression: Compress data before uploading it to S3 to reduce storage size and, in turn, reduce costs. Common codecs such as gzip and Snappy are read natively by analytics services such as Athena and AWS Glue.

- Choose the Right Data Format: Store large datasets in columnar formats like Parquet or ORC, which can reduce both storage and query processing costs. These formats are optimized for analytics workloads, and tools like Amazon Athena and Amazon Redshift Spectrum can read them more efficiently.
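A lifecycle policy is ultimately a small configuration document. The sketch below builds one as a Python dictionary in the shape that boto3's `put_bucket_lifecycle_configuration` accepts for its `LifecycleConfiguration` argument; nothing is sent to AWS here. The rule ID, the `raw/` prefix, and the 30/90/365-day schedule are hypothetical values chosen for illustration.

```python
def build_lifecycle_config(rule_id, prefix, ia_after_days, glacier_after_days, expire_after_days):
    """Build an S3 lifecycle configuration that tiers objects down, then expires them."""
    # S3 requires objects to be at least 30 days old before moving to Standard-IA.
    if ia_after_days < 30:
        raise ValueError("Standard-IA transition must be at least 30 days after creation")
    if not ia_after_days < glacier_after_days < expire_after_days:
        raise ValueError("expected ia_after_days < glacier_after_days < expire_after_days")
    return {
        "Rules": [
            {
                "ID": rule_id,
                "Status": "Enabled",
                "Filter": {"Prefix": prefix},
                "Transitions": [
                    {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
                    {"Days": glacier_after_days, "StorageClass": "GLACIER"},
                ],
                "Expiration": {"Days": expire_after_days},
            }
        ]
    }


# Hypothetical schedule: infrequent access after 30 days, archive after 90, delete after a year.
config = build_lifecycle_config("tier-raw-data", "raw/", 30, 90, 365)
print(config["Rules"][0]["Transitions"])
```

Validating the schedule before applying it catches ordering mistakes locally; the expiration rule is optional and should be left out for data that must be retained indefinitely.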

5. Efficiently Manage Data Processing Workloads

Processing large amounts of data can lead to substantial costs, especially when it comes to the execution time of complex queries or data transformations. The following practices can help optimize processing costs:

- Leverage AWS Lambda: For smaller, serverless workloads, AWS Lambda is an efficient way to execute code in response to triggers without the need to provision or manage servers. Lambda functions are billed by the number of requests and by execution duration, scaled by the memory configured, so short, infrequent jobs can cost far less than keeping a server running.

- Use AWS Glue for ETL Jobs: AWS Glue is a serverless ETL service that allows you to prepare and transform data without the need to manage infrastructure. AWS Glue can automatically scale your ETL jobs to fit the processing power needed, ensuring cost efficiency while handling large-scale data transformations.

- Optimize Query Performance: When using services like Amazon Athena or Redshift, optimize your queries to reduce the amount of data scanned. This can be achieved by partitioning your data effectively and using columnar storage formats.
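To see why partitioning and columnar formats matter for pay-per-scan services, the rough estimate below compares a full scan with a pruned query. It is a sketch under stated assumptions: the $5 per TB price is an assumed rate that should be checked against current pricing for your Region, data is assumed to be spread evenly across partitions and columns, and the table dimensions are hypothetical.

```python
def estimate_scan(table_tb, partitions_total, partitions_read,
                  columns_total, columns_read,
                  columnar=True, price_per_tb=5.0):
    """Return (terabytes scanned, estimated cost in USD) for one query."""
    partition_fraction = partitions_read / partitions_total
    # Only columnar formats let the engine skip columns the query does not reference.
    column_fraction = columns_read / columns_total if columnar else 1.0
    scanned_tb = table_tb * partition_fraction * column_fraction
    return scanned_tb, scanned_tb * price_per_tb


# Hypothetical 10 TB table: 365 daily partitions, 40 columns.
full_tb, full_cost = estimate_scan(10, 365, 365, 40, 40, columnar=False)
pruned_tb, pruned_cost = estimate_scan(10, 365, 7, 40, 4)

print(f"full scan:   {full_tb:.3f} TB, ${full_cost:.2f}")
print(f"pruned scan: {pruned_tb:.3f} TB, ${pruned_cost:.2f}")
```

In this hypothetical case, reading one week of partitions and a tenth of the columns cuts the scanned volume by more than two orders of magnitude. Real savings depend on how evenly the data is distributed and on how well the files compress.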

6. Monitor and Set Budgets

AWS provides several tools to help track and manage your usage, ensuring you stay within budget.

- AWS Cost Explorer: Use Cost Explorer to track usage patterns and identify areas where you can cut costs. It provides visualizations that help you understand your spending trends.

- AWS Budgets: Set custom budgets and receive notifications when your usage or spending exceeds the budget threshold. This proactive approach helps companies avoid unexpected bills and provides greater control over cloud expenses.
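The threshold logic behind budget alerts is simple enough to sketch directly. The function below is an illustration only, not the AWS Budgets API: the threshold percentages, the spend figures, and the straight-line forecast are assumptions made for the example.

```python
def budget_status(spend_to_date, budget, day_of_month, days_in_month,
                  thresholds=(0.5, 0.8, 1.0)):
    """Report which thresholds actual spend has crossed and whether the month is on track."""
    # Naive forecast: assume the rest of the month continues at the same daily rate.
    forecast = spend_to_date / day_of_month * days_in_month
    crossed = [t for t in thresholds if spend_to_date >= t * budget]
    return {
        "forecast": forecast,
        "thresholds_crossed": crossed,
        "forecast_exceeds_budget": forecast > budget,
    }


# Hypothetical month: $600 spent by day 15 of 30 against a $1,000 budget.
status = budget_status(spend_to_date=600.0, budget=1000.0, day_of_month=15, days_in_month=30)
print(status)
```

Alerting on the forecast as well as on actual spend gives a warning while there is still time to act. In this example only the 50% threshold has been crossed, yet the projected month-end total already exceeds the budget.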

Partner with HashStudioz for AWS Data Analytics Services

By partnering with HashStudioz, businesses can optimize AWS data processing costs, improve resource management, and gain insights into how to reduce wastage and avoid overspending. Their team of experts can help implement the best practices discussed in this article and design customized solutions tailored to your business’s unique needs.

Why HashStudioz?

HashStudioz is a leading AWS data analytics services company that helps businesses optimize their AWS infrastructure and reduce costs. By working with HashStudioz, you can gain valuable insights into how to manage your data analytics needs effectively while keeping your AWS costs under control.

Ready to take control of your AWS data processing costs? Reach out to HashStudioz today and start optimizing your cloud usage for maximum savings and performance.

Conclusion

Optimizing AWS data processing costs is essential for any organization looking to scale efficiently while controlling cloud expenses. By following best practices like right-sizing resources, using Reserved Instances and Savings Plans, leveraging Spot Instances, optimizing storage, and improving data processing efficiency, companies can significantly reduce their AWS costs.

Additionally, partnering with an AWS data analytics services company like HashStudioz can further enhance cost optimization and ensure your business is making the most of its cloud investments.

By implementing these strategies, businesses can keep their AWS bills under control while continuing to derive value from the powerful AWS data analytics services. Cost optimization is not just about cutting expenses—it’s about using AWS efficiently to unlock greater value and achieve long-term success.

Frequently Asked Questions (FAQs)

1. How can AWS data analytics services help businesses reduce costs?

AWS data analytics services provide businesses with powerful tools for processing and analyzing large amounts of data. By using services like Amazon Redshift, Athena, and Kinesis, companies can handle data analytics efficiently. These services help organizations scale their operations without having to manage infrastructure. Cost optimization is achieved by employing best practices such as right-sizing resources, utilizing Reserved Instances, and managing data storage effectively. These strategies ensure that businesses only pay for what they use, preventing over-provisioning and unnecessary expenses.

2. What is the difference between Reserved Instances and Savings Plans on AWS?

Both Reserved Instances (RIs) and Savings Plans offer cost-saving options on AWS, but they differ in flexibility:

- Reserved Instances (RIs): These require businesses to commit to a specific configuration of an AWS service (e.g., an EC2 instance type in a given Region) for a one- or three-year term in exchange for discounted rates. They are ideal for predictable, long-term workloads.

- Savings Plans: More flexible than RIs, Savings Plans allow businesses to save money by committing to a specific hourly spend on compute (e.g., EC2, Fargate, Lambda) over one or three years. They apply to a broader range of services and can offer better savings for companies with dynamic usage patterns.

3. How can I optimize data storage costs on AWS?

There are several ways to optimize data storage costs on AWS:

- Use lifecycle policies in Amazon S3 to automatically move data to more cost-effective storage classes, such as S3 Glacier for archival data.

- Compress data before uploading to reduce storage size.

- Choose appropriate storage formats like Parquet or ORC, which optimize both storage and query performance, especially for data analytics workloads.

- Regularly clean up unused or obsolete data to avoid unnecessary storage costs.

By adopting these practices, companies can significantly reduce storage costs while maintaining efficient access to their data.
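As a quick illustration of the compression step, the snippet below gzips a batch of JSON records in memory and compares sizes before any upload would happen. The records are synthetic and highly repetitive, so the ratio it prints is not representative of real data.

```python
import gzip
import json

# Synthetic, repetitive records standing in for an export file.
records = [{"event_id": i, "status": "ok", "region": "us-east-1"} for i in range(1000)]
raw = "\n".join(json.dumps(r) for r in records).encode("utf-8")

compressed = gzip.compress(raw)
ratio = len(compressed) / len(raw)

# Confirm the data survives a round trip before relying on the compressed copy.
assert gzip.decompress(compressed) == raw

print(f"raw: {len(raw)} bytes, gzip: {len(compressed)} bytes, ratio: {ratio:.2f}")
```

Storage is billed on the bytes stored, so the compressed size is what counts; for analytics workloads, compression combined with a columnar format such as Parquet usually does better than compressing row-oriented text files.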

4. Can Spot Instances save money for AWS data processing workloads?

Yes, Spot Instances can save up to 90% compared to On-Demand instances. Spot Instances are ideal for non-critical, batch processing, and flexible workloads, as they let companies run on unused EC2 capacity at the current Spot price, with no bidding required. AWS can reclaim Spot Instances with a two-minute warning when it needs the capacity back, but the savings they offer can greatly reduce data processing costs. It is crucial to design workloads that are fault-tolerant and can handle interruptions if Spot Instances are used.

5. How do AWS Cost Explorer and Budgets help with cost optimization?

AWS Cost Explorer and AWS Budgets are two key tools for managing and optimizing AWS costs:

- AWS Cost Explorer: Provides detailed insights into your AWS usage and spending patterns. It allows you to visualize costs, identify trends, and detect areas where you can optimize spending. It is especially useful for tracking the effectiveness of your cost optimization efforts over time.

- AWS Budgets: Lets you set custom budgets for your AWS services and receive notifications when your costs exceed a certain threshold. This helps you monitor and control your cloud expenses, enabling proactive adjustments to avoid overspending.

Both tools are essential for continuously tracking and managing cloud costs, ensuring that your company stays within its budget while optimizing AWS usage.