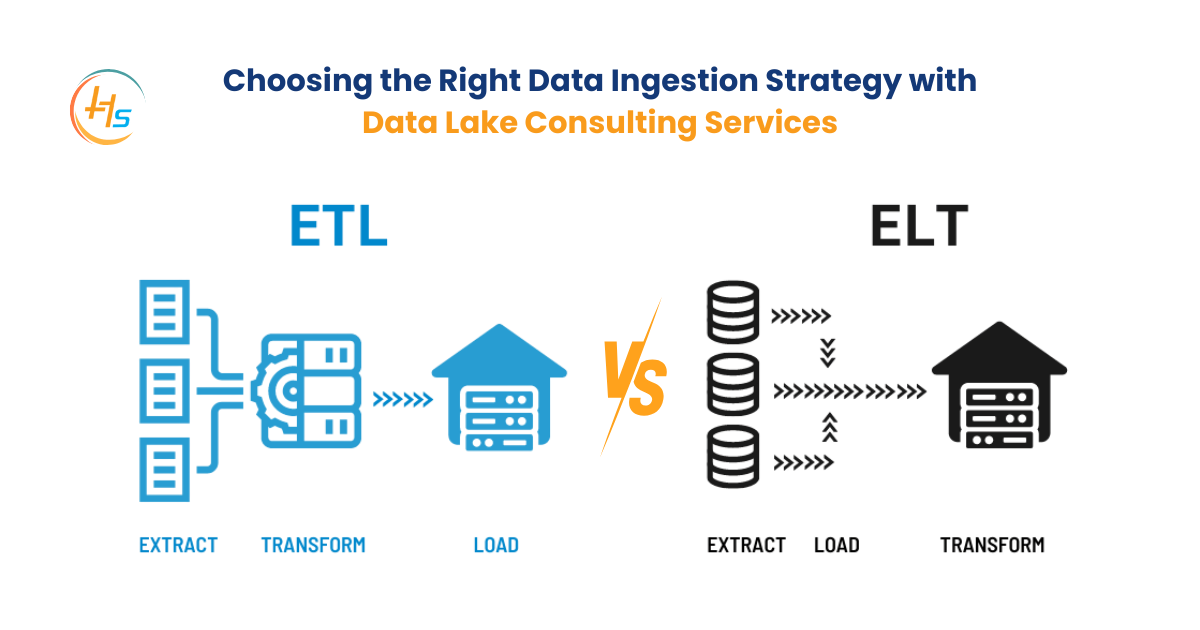

The digital age has led to an explosion of data, and organizations are constantly seeking ways to harness it effectively. Whether for business intelligence, analytics, or real-time decision-making, managing data efficiently is critical. This is where data ingestion strategies such as ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) come into play.

However, choosing the right approach is not always straightforward. Businesses must weigh factors like speed, transformation needs, scalability, and compliance before selecting ETL or ELT. Data Lake Consulting Services can guide businesses toward the data ingestion strategy that best fits these constraints.

- 90% of organizations say data lakes are critical for analytics.

- 60% of businesses are shifting from ETL to ELT for better performance.

- 80% of big data projects rely on ELT-based architectures.


Understanding Data Ingestion

What is Data Ingestion?

Data ingestion is the process of collecting and importing data from various sources into a storage system, such as a data warehouse or data lake. Once stored, the data can be analyzed to derive actionable insights that drive business intelligence, decision-making, and operational efficiency.

Organizations deal with vast amounts of data from multiple sources, including databases, IoT devices, APIs, social media platforms, and cloud applications. Data ingestion captures, stores, and makes this information available for further analysis.

Types of Data Ingestion Models

There are two primary models for data ingestion:

1. Batch Processing

- Data is collected and processed in groups (batches) at scheduled intervals.

- Commonly used for traditional data warehouses where real-time insights are not critical.

- Efficient for handling large amounts of historical data.

- Example: A retail company processing daily sales data at midnight for analytics.

2. Real-Time Processing

- Data is ingested and processed as it arrives, enabling immediate insights.

- Suitable for industries that require fast decision-making, such as finance, healthcare, and e-commerce.

- Uses streaming technologies like Apache Kafka and Amazon Kinesis.

- Example: Fraud detection systems analyzing banking transactions in real-time.
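The difference between the two models can be sketched in a few lines of Python. This is a minimal illustration only: the `EVENTS` list and both function names are hypothetical, and a production pipeline would read from a scheduler or a streaming platform rather than an in-memory list.

```python
from collections import defaultdict
from typing import Iterable, Iterator

# Hypothetical sales events as (day, amount); values are illustrative only.
EVENTS = [
    ("2024-01-01", 20.0),
    ("2024-01-01", 5.5),
    ("2024-01-02", 12.0),
]


def batch_ingest(events: Iterable[tuple[str, float]]) -> dict[str, float]:
    """Batch model: wait for the whole batch, then process it in one pass."""
    totals: dict[str, float] = defaultdict(float)
    for day, amount in events:
        totals[day] += amount
    return dict(totals)


def stream_ingest(events: Iterable[tuple[str, float]]) -> Iterator[tuple[str, float]]:
    """Real-time model: emit an updated running total as each event arrives."""
    totals: dict[str, float] = defaultdict(float)
    for day, amount in events:
        totals[day] += amount
        yield day, totals[day]


batch_result = batch_ingest(EVENTS)
stream_result = list(stream_ingest(EVENTS))
```

The batch function returns one result after all events are seen, while the streaming generator produces an updated total after every event, which is what allows a consumer such as a fraud check to react immediately.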

Importance of Data Ingestion in Modern Businesses

Data ingestion is a critical component of modern data management strategies. Here’s why it matters:

- Enables Better Decision-Making – Well-organized and structured data provides accurate insights, helping businesses make informed decisions.

- Enhances Business Intelligence – Timely data ingestion ensures that analytics and reporting tools have access to up-to-date information, improving overall business intelligence.

- Improves Operational Efficiency – Automating data ingestion reduces the manual effort required to collect and process data, minimizing errors and enhancing productivity.

- Supports Big Data and AI Applications – Many AI and machine learning models rely on high-quality, continuously updated data, which is facilitated by effective data ingestion pipelines.

- Ensures Data Consistency and Compliance – Proper data ingestion mechanisms standardize data formats and enforce compliance with industry regulations.

What is ETL? (Extract, Transform, Load)

ETL (Extract, Transform, Load) is the traditional method for moving data from multiple sources into a centralized system, such as a data warehouse or analytical storage. Businesses widely adopt it for structured data processing because data is cleaned, formatted, and optimized before it is used for business intelligence, reporting, and analytics.

How ETL Works

ETL follows a structured three-step process:

1. Extract

- Data is extracted from various sources, including databases, APIs, CRM systems, cloud services, and log files.

- Ensures that data from disparate sources is collected efficiently.

- Can handle structured, semi-structured, and unstructured data, though primarily used for structured data.

2. Transform

- Data is cleaned, formatted, and standardized to meet specific business requirements.

- Removes duplicate records, corrects inconsistencies, and ensures compatibility with target systems.

- Enhances data quality by applying business rules, aggregations, and validations.

3. Load

- The transformed data is loaded into a target system, typically a data warehouse, relational database, or analytics platform.

- Data is structured and ready for querying, reporting, and advanced analytics.
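The three steps above can be sketched with Python's built-in `sqlite3` module standing in for the target warehouse. Everything here is an assumption made for illustration: the `SOURCE_ROWS` data, the `orders` table, and the validation rule (drop rows without an email) are hypothetical, not part of any specific ETL tool.

```python
import sqlite3

# Hypothetical source rows, standing in for a CRM export or API response.
SOURCE_ROWS = [
    {"id": 1, "email": " Ana@Example.com ", "amount": "19.99"},
    {"id": 1, "email": " Ana@Example.com ", "amount": "19.99"},  # duplicate
    {"id": 2, "email": "bob@example.com", "amount": "5"},
    {"id": 3, "email": "", "amount": "7.50"},  # fails validation
]


def extract() -> list[dict]:
    """Extract: collect rows from the source system."""
    return list(SOURCE_ROWS)


def transform(rows: list[dict]) -> list[tuple[int, str, float]]:
    """Transform: deduplicate, standardize, and validate before loading."""
    seen: set[int] = set()
    clean = []
    for row in rows:
        email = row["email"].strip().lower()
        if row["id"] in seen or not email:
            continue  # drop duplicates and rows without an email
        seen.add(row["id"])
        clean.append((row["id"], email, float(row["amount"])))
    return clean


def load(conn: sqlite3.Connection, rows: list[tuple[int, str, float]]) -> None:
    """Load: write only the cleaned rows into the target table."""
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, email TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, transform(extract()))
loaded = conn.execute("SELECT id, email, amount FROM orders ORDER BY id").fetchall()
```

Note that the target table never sees the duplicate or the invalid row. That is the property ETL is chosen for: only validated data reaches the warehouse.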

Key Advantages of ETL

- Data Integrity and Quality – Since data is transformed before loading, it is well-structured, cleaned, and validated. This ensures high-quality datasets for decision-making.

- Compliance and Governance – ETL standardizes data formats and helps enforce compliance with GDPR, HIPAA, and other data regulations before data reaches the target system, making it ideal for industries with strict data governance policies.

- Optimized for Structured Data – ETL is well-suited for relational databases and structured datasets used in traditional business intelligence (BI) and reporting systems.

- Improves Performance – By transforming data before loading, ETL reduces the processing burden on the data warehouse, leading to faster query performance.

Common Use Cases of ETL

- Data Warehousing and Analytics – ETL is extensively used to integrate data from multiple sources into data warehouses like Amazon Redshift, Google BigQuery, and Snowflake for analytics and reporting.

- Regulatory Compliance and Reporting – Businesses in finance, healthcare, and government sectors use ETL to standardize and cleanse data to meet compliance requirements.

- Legacy System Integrations – Organizations migrating from legacy databases use ETL to extract, clean, and load historical data into modern data platforms.

ETL remains a powerful and reliable data ingestion approach for enterprises that require high-quality, structured, and governed data for decision-making. However, as data volume and speed requirements grow, ELT (Extract, Load, Transform) is emerging as a more flexible alternative for big data and cloud-based architectures.

What is ELT? (Extract, Load, Transform)

ELT (Extract, Load, Transform) is a modern data ingestion strategy where data is first extracted from various sources, then loaded into a data lake or cloud storage, and transformed later as needed. Unlike ETL, where transformation happens before loading, ELT leverages the computing power of cloud platforms and modern big data architectures to handle data transformation at scale.

How ELT Works

ELT follows a streamlined approach that benefits from cloud storage and computing capabilities:

1. Extract

- Data is collected from multiple sources such as databases, SaaS applications, IoT devices, and streaming services.

- Similar to ETL, it can handle structured, semi-structured, and unstructured data.

2. Load

- Raw data is loaded directly into a data lake (e.g., Amazon S3, Google Cloud Storage, or Azure Data Lake) or a cloud-based data warehouse (e.g., Snowflake, Google BigQuery, or Amazon Redshift).

- Since there is no pre-processing, data ingestion is faster than traditional ETL.

3. Transform

- Data transformation occurs on-demand inside the storage system, meaning it is processed only when needed for analysis.

- Businesses can apply schema-on-read instead of schema-on-write, allowing for more flexibility in handling diverse data types.

- Uses cloud-based processing tools such as Apache Spark, Databricks, and SQL engines for large-scale transformations.
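The same idea can be sketched with Python's built-in `sqlite3` module playing the role of the cloud warehouse. This is a simplified illustration under stated assumptions: the `raw_orders` and `orders` tables and the sample rows are hypothetical, and a real ELT setup would run the SQL step in an engine such as those named above.

```python
import sqlite3

# Hypothetical raw rows, loaded exactly as they arrive (all values are text).
RAW_ROWS = [
    ("1", " Ana@Example.com ", "19.50"),
    ("1", " Ana@Example.com ", "19.50"),  # duplicate, still loaded
    ("2", "bob@example.com", "5"),
]

conn = sqlite3.connect(":memory:")

# Load: store the raw data first, with no cleaning or type enforcement.
conn.execute("CREATE TABLE raw_orders (id TEXT, email TEXT, amount TEXT)")
conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", RAW_ROWS)

# Transform: run later, inside the storage engine, using its own SQL.
conn.execute(
    """
    CREATE TABLE orders AS
    SELECT DISTINCT
        CAST(id AS INTEGER)  AS id,
        LOWER(TRIM(email))   AS email,
        CAST(amount AS REAL) AS amount
    FROM raw_orders
    """
)

raw_count = conn.execute("SELECT COUNT(*) FROM raw_orders").fetchone()[0]
orders = conn.execute("SELECT id, email, amount FROM orders ORDER BY id").fetchall()
```

Unlike the ETL flow, the raw table keeps every row, including the duplicate, so a different transformation can be written later without re-extracting from the source.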

Key Advantages of ELT

- Scalability and Speed – Since transformation is postponed until after loading, ELT is faster and more scalable for large datasets, making it ideal for big data workloads.

- Better for Unstructured and Semi-Structured Data – ELT is more adaptable to JSON, XML, log files, images, videos, and IoT-generated data, unlike ETL, which works best with structured data.

- Cost-Effective for Big Data – By leveraging cloud-based storage and distributed computing, ELT reduces processing costs compared to on-premises ETL solutions that require expensive pre-processing.

- Optimized for Cloud Environments – ELT takes full advantage of serverless computing, parallel processing, and distributed storage, reducing infrastructure management efforts.

Common Use Cases of ELT

- Big Data Analytics and Machine Learning – Data scientists and analysts can query raw data and apply transformations as needed for predictive modeling and AI applications.

- Cloud-Based Data Lakes – ELT works seamlessly with modern data lake architectures, where businesses store massive amounts of raw, structured, and unstructured data for future analytics.

- IoT and Real-Time Data Processing – ELT efficiently ingests high-speed data from IoT sensors, connected devices, and real-time event streams, enabling fast analytics and decision-making.

ELT is becoming a common focus of Data Lake Consulting Services as businesses increasingly migrate to cloud-first architectures that demand greater flexibility, scalability, and cost-efficiency in data management.

ETL vs. ELT: A Detailed Comparison

Both ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) serve as data ingestion strategies, but they differ in execution, performance, and suitability for various data processing needs. Below is a detailed comparison of ETL and ELT based on key factors.

| Feature | ETL (Extract, Transform, Load) | ELT (Extract, Load, Transform) |
| --- | --- | --- |
| Performance | Slower due to transformation before loading. Data must be structured before being stored, adding processing time. | Faster since data is loaded first and transformed only when needed, utilizing cloud and parallel processing capabilities. |
| Data Type | Best suited for structured data from relational databases and ERP systems. | Works well with structured, semi-structured, and unstructured data, including JSON, XML, IoT data, images, and videos. |
| Scalability | Limited scalability, as transformations occur on-premises or within traditional data warehouses. | Highly scalable in cloud environments, as data lakes and cloud storage allow massive datasets to be stored and processed efficiently. |
| Cost | Higher due to pre-processing before storage, requiring expensive ETL tools and on-premises infrastructure. | Lower due to cloud-based storage and processing, leveraging pay-as-you-go models that reduce infrastructure costs. |
| Compliance | Strong data governance and compliance enforcement since data is cleaned and validated before loading. | Requires additional governance measures, as raw data is stored before applying security, privacy, and compliance rules. |
| Data Processing Time | Takes longer as transformations occur before storage, requiring extensive data cleansing, mapping, and validation. | Faster since transformations occur post-loading, enabling real-time querying and analysis without initial delays. |
| Flexibility | Less flexible, as the schema must be predefined before data is loaded, limiting adaptability to new data formats. | More flexible, as transformations happen later, allowing schema-on-read and dynamic analysis. |
| Infrastructure Dependency | Often requires dedicated on-premises hardware and expensive ETL software to perform transformations before loading. | Primarily cloud-native, using serverless computing, distributed processing, and scalable storage solutions. |
| Use Case Suitability | Ideal for structured reporting, regulatory compliance, and traditional business intelligence (BI) tools. | Best for big data analytics, AI/ML applications, real-time processing, and cloud-based data lakes. |
| Data Latency | Higher latency due to batch processing, making it less suitable for real-time insights. | Lower latency, as data can be queried immediately in its raw form, with transformations applied as needed. |
| Tool Compatibility | Works with traditional ETL tools like Informatica, Talend, and IBM DataStage. | Compatible with modern cloud platforms like Snowflake, Databricks, BigQuery, and AWS Redshift Spectrum. |

Key Takeaways: Choosing Between ETL and ELT

Choose ETL if:

- Your organization primarily works with structured data in relational databases.

- You need strong data governance and compliance before loading the data.

- Your reporting and analytics rely on pre-processed, structured datasets.

- You have on-premises infrastructure with limited cloud adoption.

Choose ELT if:

- You deal with large volumes of semi-structured and unstructured data (IoT, logs, media files).

- You need real-time or near-real-time processing with lower latency.

- Your business operates in a cloud-native environment with scalable computing resources.

- Your focus is on big data analytics, machine learning, and AI-driven insights.
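As a rough illustration, the two checklists above can be encoded as a simple scoring function. This is a toy sketch, not a decision tool: the `Workload` fields, the equal weighting of each criterion, and the "hybrid" tie-break are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical summary of a workload; field names are illustrative."""
    mostly_structured: bool
    governance_before_load: bool
    cloud_native: bool
    needs_low_latency: bool


def suggest_strategy(w: Workload) -> str:
    """Count how many checklist items point to each approach."""
    etl_score = sum([w.mostly_structured, w.governance_before_load, not w.cloud_native])
    elt_score = sum([not w.mostly_structured, w.cloud_native, w.needs_low_latency])
    if etl_score > elt_score:
        return "ETL"
    if elt_score > etl_score:
        return "ELT"
    return "hybrid"  # criteria pull both ways; consider combining the two


bank_reporting = suggest_strategy(Workload(True, True, False, False))
iot_telemetry = suggest_strategy(Workload(False, False, True, True))
mixed = suggest_strategy(Workload(True, True, True, True))
```

In practice the criteria rarely carry equal weight. A hard compliance requirement, for instance, may outweigh every other factor.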

As businesses transition toward cloud-based data solutions, Data Lake Consulting Services play a crucial role in helping organizations adopt ELT strategies, enabling scalable, cost-effective, and real-time data processing solutions.

Choosing the Right Data Ingestion Strategy

Selecting between ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) depends on multiple factors, including data volume, compliance requirements, scalability, and business goals. Businesses must assess their data infrastructure and analytical needs to determine which approach aligns best with their objectives.

Factors to Consider When Choosing Between ETL and ELT

1. Data Volume and Velocity

- ETL is effective for handling moderate to large structured datasets that require extensive cleansing and transformation before analysis.

- ELT handles massive volumes of unstructured and semi-structured data better, enabling businesses to store raw data and process it on demand.

2. Compliance and Security Needs

- ETL enforces strong data governance and security by transforming and standardizing data before storage, making it ideal for industries with strict data protection regulations (e.g., healthcare, finance, and government sectors).

- ELT requires additional security measures because storing raw data before transformation increases the risk of compliance issues if not properly managed.

3. Scalability and Infrastructure Costs

- ETL often requires on-premises servers or expensive ETL tools, leading to higher infrastructure costs and limited scalability.

- ELT leverages cloud computing and distributed processing, offering a cost-effective, scalable solution that grows with data demands.

4. Business Objectives and Analytics Needs

- ETL supports structured, predefined analytics and reporting, making it suitable for organizations that rely on business intelligence (BI) dashboards and regulatory reporting.

- ELT is ideal for businesses focusing on big data, artificial intelligence (AI), machine learning (ML), and real-time analytics, as it allows for flexible querying and schema-on-read.
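Schema-on-read, mentioned above, means the raw records are stored untouched and a schema is applied only when a query runs. A minimal sketch, assuming newline-delimited JSON as the raw format (the `RAW_LINES` data and the `read_with_schema` helper are hypothetical):

```python
import json

# Hypothetical raw events stored as-is; the second record carries an extra
# field and the third is missing "temp_c".
RAW_LINES = [
    '{"device": "a1", "temp_c": 21.5}',
    '{"device": "b2", "temp_c": 19.0, "battery": 87}',
    '{"device": "c3"}',
]


def read_with_schema(lines, fields):
    """Apply a schema only at read time: keep requested fields, default to None."""
    for line in lines:
        record = json.loads(line)
        yield {name: record.get(name) for name in fields}


# Two different "schemas" over the same stored data, chosen per query.
temps = list(read_with_schema(RAW_LINES, ["device", "temp_c"]))
batteries = list(read_with_schema(RAW_LINES, ["device", "battery"]))
```

With schema-on-write, the `battery` field would have been rejected or dropped at load time unless the table had been altered first. Here it remains available to any later query that asks for it.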

When to Choose ETL

- When businesses require structured, high-quality data – ETL cleans, organizes, and structures it before loading, making it ideal for business intelligence and reporting tools.

- For regulatory compliance and governance – Industries such as banking, healthcare, and legal services that must adhere to GDPR, HIPAA, or SOX benefit from ETL’s strict data validation and standardization.

- For traditional business intelligence (BI) and reporting – Organizations that rely on structured data warehouses for generating financial reports, operational KPIs, and trend analysis will find ETL a better fit.

When to Choose ELT

- When dealing with big data and machine learning – ELT can handle large, diverse data formats, making it ideal for data lakes, AI, and ML models that require raw and semi-structured data.

- For real-time data analytics – ELT is faster and more efficient for streaming data sources, such as IoT devices, log files, and social media feeds, as it allows instant data ingestion before transformation.

- When leveraging cloud data lakes – Organizations migrating to cloud-based storage solutions like Amazon S3, Google Cloud Storage, and Azure Data Lake benefit from ELT’s scalability, cost-effectiveness, and flexibility.

The Role of Data Lake Consulting Services in ETL and ELT

As businesses generate and process vast amounts of data, choosing the right data ingestion strategy (ETL vs. ELT) becomes a critical decision. Data Lake Consulting Services help organizations navigate this complexity by offering expert guidance, optimizing cloud-based infrastructure, and ensuring compliance with data governance standards.

How Data Lake Consulting Services Enhance Data Ingestion

1. Provide Expert Guidance on ETL vs. ELT

- Consultants assess an organization’s data architecture, analytics needs, and regulatory requirements to recommend the best ingestion strategy.

- They evaluate factors like data volume, latency, and transformation complexity to help businesses choose between ETL or ELT.

2. Optimize Cloud-Based Data Lakes for Efficiency

- Experts design and implement scalable data lake architectures that support ELT while ensuring cost efficiency and high performance.

- They help integrate cloud services such as Amazon S3, Google Cloud Storage, and Azure Data Lake with big data processing frameworks like Apache Spark and Databricks.

3. Implement Automation for Real-Time Data Ingestion

- Data Lake Consulting Services automate ingestion pipelines using technologies like Apache Kafka, AWS Glue, and Snowflake to process data in real time.

- This ensures faster insights and supports real-time decision-making in industries such as finance, healthcare, and e-commerce.

Optimizing Performance with Expert Consulting

1. Identifying Bottlenecks in Data Pipelines

- Consultants analyze data workflows to detect inefficiencies in ETL and ELT processes, ensuring that data ingestion, storage, and processing run smoothly.

- They optimize query performance and data retrieval speeds using indexing, partitioning, and caching techniques.

2. Implementing the Best Storage and Processing Solutions

- Consulting services help select the right data storage models, such as columnar databases, object storage, or distributed file systems, based on business needs.

- They integrate serverless computing and distributed processing solutions, such as Google BigQuery, Amazon Redshift, and Snowflake, for high-speed data transformation and analytics.
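Of the optimization techniques mentioned in this section, partitioning is the easiest to show in isolation. The sketch below writes events into one directory per date and then reads a single partition. The `date=...` directory naming is a common convention assumed here for illustration, and the sample events and helper names are hypothetical.

```python
import csv
import tempfile
from pathlib import Path

# Hypothetical events; in a data lake these would be objects in cloud storage.
EVENTS = [
    {"date": "2024-01-01", "user": "ana", "amount": "20"},
    {"date": "2024-01-01", "user": "bob", "amount": "5"},
    {"date": "2024-01-02", "user": "ana", "amount": "12"},
]


def write_partitioned(root: Path, events: list[dict]) -> None:
    """Write each event into a directory named after its partition key."""
    for event in events:
        part_dir = root / f"date={event['date']}"
        part_dir.mkdir(parents=True, exist_ok=True)
        path = part_dir / "events.csv"
        is_new = not path.exists()
        with path.open("a", newline="") as fh:
            writer = csv.DictWriter(fh, fieldnames=["user", "amount"])
            if is_new:
                writer.writeheader()
            writer.writerow({"user": event["user"], "amount": event["amount"]})


def read_partition(root: Path, date: str) -> list[dict]:
    """Read a single partition; files for other dates are never opened."""
    with (root / f"date={date}" / "events.csv").open(newline="") as fh:
        return list(csv.DictReader(fh))


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    write_partitioned(root, EVENTS)
    partitions = sorted(p.name for p in root.iterdir())
    jan_first = read_partition(root, "2024-01-01")
```

A query that filters on the partition key only touches the matching directory, which is why partitioning can reduce scan time and cost on large datasets.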

Ensuring Compliance and Security

1. Maintaining Compliance with Data Privacy Regulations

- Compliance with GDPR, HIPAA, CCPA, and other regulations is a top priority. Consulting services implement data masking, anonymization, and retention policies to protect sensitive data.

2. Implementing Encryption and Access Control

- Role-based access controls (RBAC), multi-factor authentication (MFA), and data encryption strengthen data security to prevent unauthorized access.

- Consultants encrypt data in transit and at rest to mitigate breach and cyberattack risks.
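Data masking and pseudonymization can be illustrated with Python's standard `hmac` and `hashlib` modules. This is a minimal sketch under stated assumptions: the `SECRET_KEY` value, the helper names, and the sample record are hypothetical, and whether a keyed hash satisfies a given regulation depends on the regulation and how the key is managed.

```python
import hashlib
import hmac

# Hypothetical secret; in practice it would come from a secrets manager,
# never from source code.
SECRET_KEY = b"example-key-do-not-use"


def pseudonymize(value: str) -> str:
    """Replace an identifier with a keyed hash so records can still be joined."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(SECRET_KEY, normalized, hashlib.sha256).hexdigest()


def mask_email(email: str) -> str:
    """Keep the first character and the domain, hide the rest of the local part."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}{'*' * max(len(local) - 1, 0)}@{domain}"


record = {"email": "Ana@Example.com", "amount": 19.99}
safe_record = {
    "email_token": pseudonymize(record["email"]),
    "email_masked": mask_email(record["email"]),
    "amount": record["amount"],
}
```

The token is stable for the same input, so analysts can still count or join on customers without seeing the address, while the masked form is suited to display in reports.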

Conclusion

Choosing between ETL and ELT depends on an organization’s data needs, infrastructure, and compliance requirements. ETL is best for structured data and compliance-driven industries, while ELT offers scalability and cost benefits for big data. Data Lake Consulting Services play a crucial role in optimizing data ingestion strategies, ensuring that businesses make the most of their data.

FAQs

1. What is the main difference between ETL and ELT?

The primary difference lies in the sequence of data processing:

- ETL (Extract, Transform, Load) transforms data before loading it into the storage system, ensuring structured, clean, and high-quality data.

- ELT (Extract, Load, Transform) first loads raw data into a data lake or cloud storage and then transforms it as needed, offering greater flexibility and scalability.

2. Which is better for big data analytics: ETL or ELT?

ELT is generally better for big data analytics because:

- It can handle large volumes of structured, semi-structured, and unstructured data.

- It leverages cloud-based data lakes, allowing for scalable, distributed processing.

- It enables real-time analytics and machine learning applications without the need for extensive pre-processing.

3. Can I use both ETL and ELT together?

Yes, many businesses adopt a hybrid approach that combines both ETL and ELT:

- ETL processes structured data requiring strict governance and compliance (e.g., financial reporting, regulatory requirements).

- ELT supports big data analytics, machine learning, and real-time processing by storing data first and transforming it later.

4. How can Data Lake Consulting Services help with ETL and ELT?

Data Lake Consulting Services play a critical role in optimizing data ingestion strategies by:

- Assessing business needs and recommending the most suitable approach (ETL or ELT).

- Designing scalable data pipelines that integrate with cloud-based data lakes.

- Automating data ingestion to ensure real-time or batch processing efficiency.

- Enhancing security and compliance, ensuring data governance meets industry standards.